My Favorites This Week

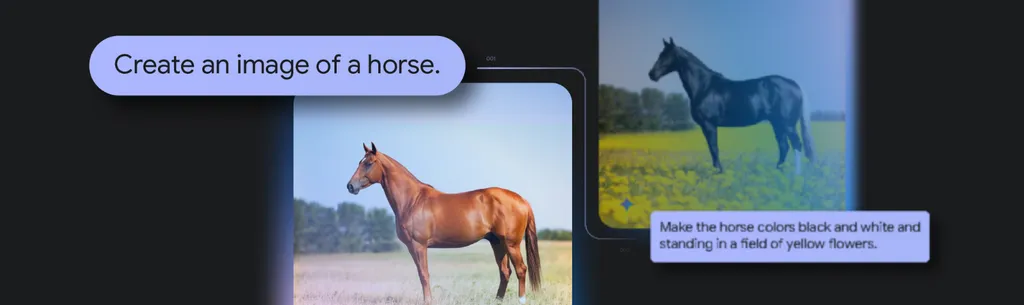

Gemini Native Image Generation

Mar 13, 2025

Gemini 2.0 Flash now supports native image generation. It can create images that draw on the conversation's context, edit images through multi-turn conversation, and render long passages of text inside images, all designed for seamless use within a chat.

Google’s Gemma 3

Mar 12, 2025

Gemma 3 is Google’s latest family of lightweight, open AI models, built on the same technology as Gemini 2.0. Designed for speed and efficiency, the models run directly on devices, from phones to workstations, so developers can build AI applications wherever they need them.

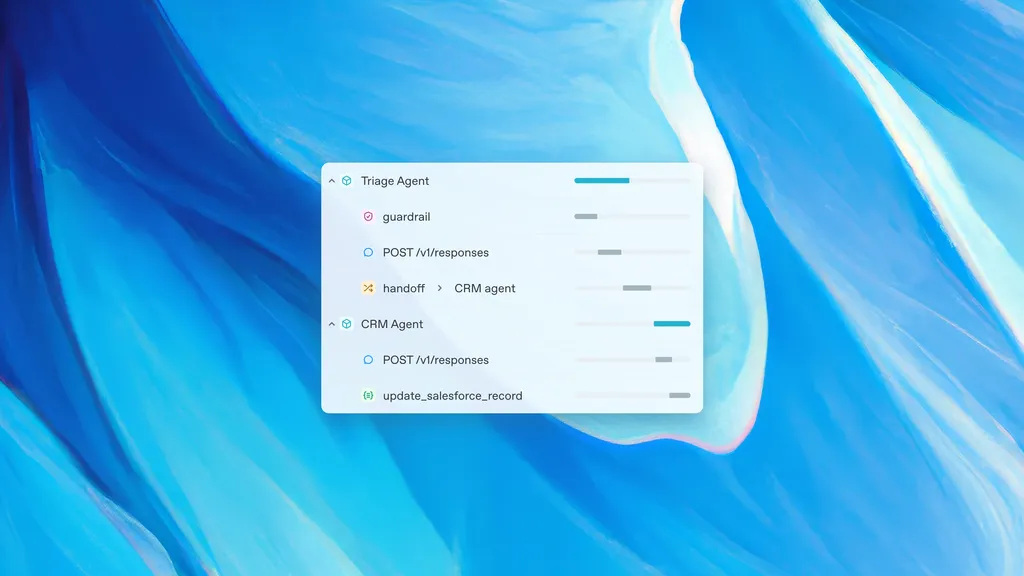

OpenAI’s Agent-Building Tools

Mar 12, 2025

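OpenAI introduced new APIs and tools to simplify agent development: the Responses API; built-in tools for web search, file search, and computer use; a new Agents SDK for orchestrating single- and multi-agent workflows; and integrated observability tools for tracing and inspecting workflow execution.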

OpenAI: New tools for building agents

Other Releases

Gemini 2.0 Flash Thinking Upgrades

Mar 13, 2025

The upgraded Gemini 2.0 Flash Thinking model improves reasoning, connects to more apps, and adds personalization. Key updates include free access to Deep Research, a 1M-token context window, smarter task automation, and customizable AI experts called Gems.

Reka Flash 3 Reasoning

Mar 11, 2025

Reka Flash 3 is a 21-billion-parameter general-purpose reasoning model, trained from scratch on synthetic and public datasets. Optimized for low latency and on-device deployment, it competes with proprietary models such as OpenAI o1-mini.

Reka: Reasoning with Reka Flash 3
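To put "on-device" in perspective for a 21-billion-parameter model like Reka Flash 3, here is a back-of-the-envelope estimate of the memory needed just to hold its weights at a few common precisions. The precisions listed are illustrative assumptions, not Reka's published deployment formats, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Back-of-the-envelope weight-memory estimate for a 21B-parameter model.
# Assumption: memory = parameter count x bytes per parameter. Activations,
# KV cache, and runtime overhead are deliberately ignored.

PARAMS = 21e9  # parameter count stated in the Reka Flash 3 announcement

# Illustrative precisions in bits per weight (assumed, not Reka's official formats).
PRECISIONS = {"fp16/bf16": 16, "int8": 8, "4-bit": 4}


def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Return the memory needed to store the weights, in gigabytes (1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in PRECISIONS.items():
        print(f"{name:>9}: {weight_memory_gb(PARAMS, bits):.1f} GB")
```

At 16 bits the weights alone need about 42 GB, while 4-bit quantization brings that down to about 10.5 GB, which is why quantization usually matters for running models of this size on a single workstation or laptop.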

Hunyuan-TurboS Model

Mar 10, 2025

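Tencent describes Hunyuan-TurboS as the first ultra-large Hybrid-Transformer-Mamba MoE (mixture-of-experts) model. It combines Mamba’s efficient long-sequence processing with the Transformer’s strong contextual understanding, which Tencent says overcomes the limitations traditional Transformers face in long-text training and inference, such as attention cost that grows quadratically with sequence length.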

X (Twitter): Hunyuan
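The long-sequence argument behind hybrid designs like Hunyuan-TurboS comes down to scaling. As a simplified illustration, not Tencent's implementation, the toy cost model below counts the dominant per-layer work for causal self-attention, where each token attends to every earlier token, against a Mamba-style recurrent scan, where each token updates a fixed-size state once. The layer count and the attention-to-scan ratio (`n_layers`, `attn_every`) are hypothetical parameters chosen only for illustration.

```python
# Toy cost model: causal self-attention vs. a Mamba-style linear scan.
# Counts token-to-token interactions (attention) or state updates (scan);
# constant factors such as hidden size and state size are ignored.


def attention_interactions(seq_len: int) -> int:
    """Causal attention: token i attends to tokens 1..i, so the total is n(n+1)/2."""
    return seq_len * (seq_len + 1) // 2


def scan_updates(seq_len: int) -> int:
    """Recurrent scan: one fixed-size state update per token, so linear in n."""
    return seq_len


def hybrid_cost(seq_len: int, n_layers: int, attn_every: int) -> int:
    """Hypothetical hybrid stack: every `attn_every`-th layer is attention and
    the rest are scan layers. The ratio is illustrative, not Hunyuan-TurboS's."""
    n_attn = n_layers // attn_every
    n_scan = n_layers - n_attn
    return n_attn * attention_interactions(seq_len) + n_scan * scan_updates(seq_len)


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        full = 8 * attention_interactions(n)  # 8 attention-only layers
        hybrid = hybrid_cost(n, n_layers=8, attn_every=4)
        print(f"n={n:>7}: all-attention {full:.2e}, hybrid {hybrid:.2e}, "
              f"ratio {full / hybrid:.1f}x")
```

Because this hybrid keeps a few attention layers, its cost is still quadratic in this toy model; the saving comes from replacing most attention layers with linear-time scans, so the ratio approaches 8 / 2 = 4 as sequences grow. Real-world gains also depend on KV-cache size and kernel efficiency, which the sketch ignores.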

👏 Thanks for reading!